Imagine a classroom where AI adjusts lessons to fit each student's learning style, predicts how they'll do academically, and grades assignments with great accuracy. Feels like the future, right? Well, much of it is already here! But as AI becomes more common in education, it raises some hard ethical questions. Educators, policymakers, and tech folks have to think about privacy, data security, bias, and inequality. The ethics of AI in education is a tricky and fast-changing field, and understanding it isn't just important; it's our responsibility. Let's look at the big ethical questions shaping education's future, including practical uses, challenges, and what's next. Whether you're a teacher, a student, or just curious about how tech and learning mix, there should be something here for you!

Summary: This article discusses AI ethics in education, covering practical applications, challenges, and future directions, and answers frequently asked questions.

Understanding AI Ethics in Education

The Role of AI in Modern Education Systems

AI is transforming the way we learn, making education more personalized and efficient. By analyzing data on how students work through material, AI can tailor learning materials to each student, aiming to deliver the right content at the right time. Platforms like Coursera and Duolingo use AI to adapt content to individual learning speeds and offer personalized feedback. This approach gives each student a better-fitting learning experience and helps identify areas where they may need additional support.

AI is also changing classroom management. Plagiarism checkers and automated grading systems free teachers to focus more on teaching. For example, Gradescope uses AI to speed up grading, so teachers can return feedback sooner. AI can also predict student performance and flag students who may be struggling, allowing for timely intervention.

However, integrating AI in education comes with its challenges, particularly concerning ethical considerations.

Core Ethical Principles in AI-Powered Education

Transparency and Accountability in AI Systems

Transparency in AI operations builds trust. Schools must establish clear guidelines on AI usage, explaining its role and functionality in courses. It's crucial to document how AI systems reach their decisions and what data they use. Problems arise when teachers use AI without disclosing it, leaving students unaware of its role. Institutions like the Colorado Community College System are spearheading discussions on AI ethics to ensure transparency in AI usage.

Privacy and Data Protection in AI Education

AI's data collection capabilities raise significant privacy concerns. Often, students are unaware that their data might be used for research or shared with third parties. Schools must adhere to data protection laws such as GDPR in Europe or FERPA in the U.S., and should encrypt data, anonymize it where possible, and maintain clear data policies.
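To make the "anonymizing data" step a little more concrete, here is a minimal sketch of one common building block: replacing student IDs with keyed-hash pseudonyms before records go to analysis. The field names, the sample record, and the helper names (`pseudonymize`, `strip_identifiers`) are assumptions made up for illustration. Note that pseudonymized data generally still counts as personal data under GDPR, so this is one safeguard among several, not full anonymization.

```python
import hashlib
import hmac


def pseudonymize(student_id: str, secret_key: bytes) -> str:
    """Return a stable pseudonym for a student ID using HMAC-SHA256."""
    digest = hmac.new(secret_key, student_id.encode("utf-8"), hashlib.sha256)
    # Truncated for readability; keep the full digest if collisions are a concern.
    return digest.hexdigest()[:16]


def strip_identifiers(record: dict, secret_key: bytes) -> dict:
    """Keep only the fields needed for analysis and swap the ID for a pseudonym."""
    return {
        "pseudonym": pseudonymize(record["student_id"], secret_key),
        "course": record["course"],
        "score": record["score"],
    }


# Hypothetical key and record; a real key would come from a secrets manager.
key = b"replace-with-a-secret-from-a-key-vault"
record = {
    "student_id": "S-1001",
    "name": "Ada Example",
    "course": "MATH101",
    "score": 87,
}
safe = strip_identifiers(record, key)
print(sorted(safe))  # the name and raw student_id are no longer present
```

Using a keyed hash rather than a plain hash means someone who knows the ID format cannot simply recompute the pseudonyms without the key.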

Addressing Bias and Fairness in AI

AI can perpetuate biases present in the data it learns from, potentially affecting students unfairly. Schools are addressing the challenge of ensuring AI fairness. For example, Arkansas State University emphasizes a human-centered approach. Key steps include training AI on diverse data sets and regularly auditing for bias.
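What might "regularly auditing for bias" look like in practice? One simple starting point is to compare how often an AI tool produces a given outcome for different student groups. The sketch below is an illustration only: the group labels, the `flagged` field, and the sample data are made up, and the 0.8 review threshold is borrowed from the "four-fifths" rule of thumb used in US employment selection, which is an assumption here rather than an education standard.

```python
from collections import defaultdict


def selection_rates(records, group_key="group", outcome_key="flagged"):
    """Share of records with a positive outcome, computed per group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for r in records:
        totals[r[group_key]] += 1
        positives[r[group_key]] += 1 if r[outcome_key] else 0
    return {g: positives[g] / totals[g] for g in totals}


def disparity_ratio(rates):
    """Lowest group rate divided by the highest; 1.0 means equal rates."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received the outcome, so there is no gap to report
    return min(rates.values()) / highest


# Hypothetical audit sample: which students an AI tool flagged for extra support.
records = [
    {"group": "A", "flagged": True},
    {"group": "A", "flagged": False},
    {"group": "A", "flagged": False},
    {"group": "A", "flagged": False},
    {"group": "B", "flagged": True},
    {"group": "B", "flagged": True},
    {"group": "B", "flagged": False},
    {"group": "B", "flagged": False},
]

rates = selection_rates(records)
ratio = disparity_ratio(rates)
needs_review = ratio < 0.8  # assumed threshold; choose one that fits your context
print(rates, ratio, needs_review)
```

A low ratio does not prove the tool is unfair, since groups can differ for legitimate reasons, but it is a useful signal that a human should take a closer look.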

Balancing Autonomy and Teacher Roles with AI

While AI can assist teachers, it cannot replace them. There is a concern that excessive AI reliance might undermine teacher-student relationships. Institutions like Miami University promote discussions about AI, using it as a learning opportunity. AI should support teachers by providing tools that enhance their teaching, not replace their roles.

In short, AI offers significant potential to enhance education, but it's crucial to address its ethical challenges thoughtfully. By adhering to these principles, schools can integrate AI responsibly, enhancing learning experiences while safeguarding students' rights and interests. The principles address distinct yet interconnected issues, so policies need to balance technological advancement with student rights, fairness, and the human element in education.

AI Ethics: Practical Applications and Use Cases

AI Ethics in Personalized Learning

AI can tailor learning experiences to individual students, but it should do so within clear ethical standards. These standards emphasize:

- Beneficence

- Fairness

- Respect for autonomy

- Transparency

- Accountability

- Privacy protection

- Non-discrimination

- Risk-benefit analysis

These principles help ensure that AI is deployed fairly in personalized learning. Transparency is crucial because it lets students and educators understand AI's recommendations, fostering trust and informed decision-making.

Fairness means guarding against bias in AI systems so that all students have equal opportunities. For example, an AI learning platform that explains its recommendations and undergoes regular bias assessments is better placed to deliver equitable outcomes.

AI Ethics for Administrative Efficiency

AI significantly enhances the efficiency of school administrative tasks. Leading companies such as IBM, Google, and Microsoft have established ethical AI guidelines focusing on:

- Fairness

- Responsibility

- Transparency

- Privacy

These guidelines can be adapted for administrative AI systems to ensure responsible use. It's vital that AI decisions are auditable and verifiable.
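One way to think about "auditable and verifiable" decisions is a log where each entry is chained to the previous one by a hash, so later edits become detectable. The following is a minimal sketch under stated assumptions: the record fields (`case`, `model`, `output`, `reason`) and the function names are hypothetical, and a real system would also need access controls and secure storage.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash: str, decision: dict) -> str:
    """Hash the previous entry's hash together with this decision's content."""
    payload = json.dumps(decision, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_entry(log: list, decision: dict) -> dict:
    """Append a decision to the log, linking it to the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    entry = {
        "decision": decision,
        "prev_hash": prev_hash,
        "hash": _entry_hash(prev_hash, decision),
    }
    log.append(entry)
    return entry


def verify(log: list) -> bool:
    """Recompute every hash; return False if any entry was changed or reordered."""
    prev_hash = GENESIS
    for entry in log:
        expected = _entry_hash(prev_hash, entry["decision"])
        if entry["prev_hash"] != prev_hash or entry["hash"] != expected:
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical decisions from an administrative AI tool.
log = []
append_entry(log, {"case": "app-001", "model": "screening-v1",
                   "output": "refer_to_human", "reason": "incomplete transcript"})
append_entry(log, {"case": "app-002", "model": "screening-v1",
                   "output": "advance", "reason": "meets published criteria"})
intact = verify(log)
print(intact)
```

Recording the model version and a short reason alongside each output is what lets a reviewer later reconstruct why a decision was made, not just that it was made.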

Frameworks like the White House Blueprint for an AI Bill of Rights, which is non-binding guidance rather than a regulation, emphasize the need to monitor bias, protect data privacy, and maintain clarity about AI's role in administrative tasks. Given the high validation costs and regulatory challenges, it's essential to evaluate AI systems for ethical risks early to avoid costly adjustments later. For instance, a university might employ AI for admissions, incorporating fairness checks, transparent decision-making processes, and privacy safeguards to maintain ethical and efficient operations.

AI Ethics in Enhancing Inclusivity

AI can help create more inclusive learning environments, provided it is built with fairness in mind. That means using balanced datasets and continuous bias checks to prevent the exclusion of, or harm to, marginalized groups. Transparency and user control empower individuals from diverse backgrounds to understand and manage AI interactions, enhancing inclusivity.

Legal frameworks, such as the anti-discrimination laws enforced by the US Equal Employment Opportunity Commission, set standards that support inclusivity. Consider an AI recruitment tool that is routinely reviewed for bias, provides clear decision rationales, and complies with anti-discrimination laws to promote workplace diversity.

In summary, ethical AI frameworks underscore the importance of fairness, transparency, responsibility, and privacy. These principles guide the application of AI in education, administration, and inclusivity to ensure its ethical deployment. Regulatory bodies and ethics boards play a critical role in preventing bias and safeguarding rights, highlighting the importance of early ethical reviews and continuous evaluations.

Challenges and Future Directions

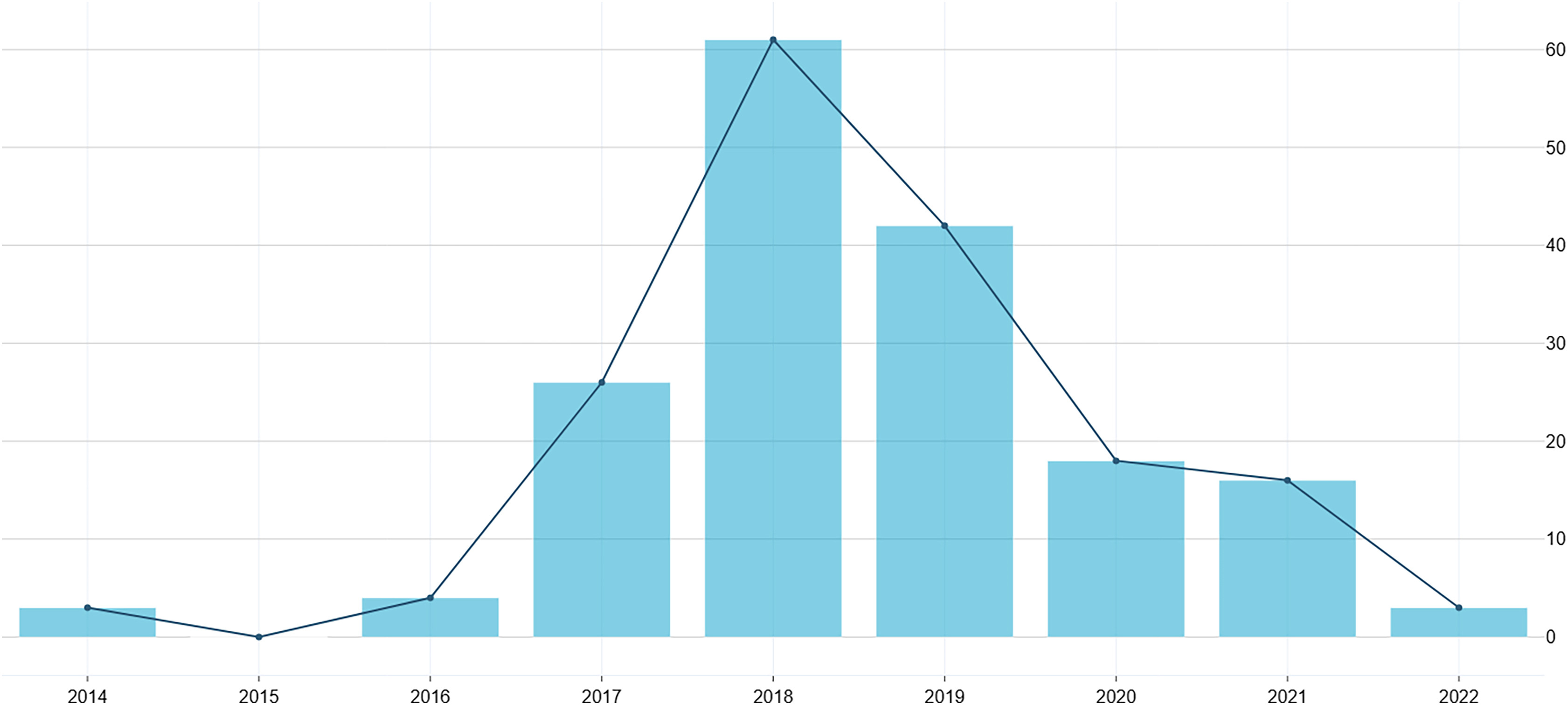

AI Ethics in Regulatory and Policy Development

AI technologies are evolving rapidly, and regulation struggles to keep pace. The EU AI Act is a prominent example: it establishes risk-based tiers with stringent rules for high-risk applications such as healthcare and law enforcement, and it also treats certain education uses, such as admissions and the assessment of learning outcomes, as high-risk. The framework emphasizes clarity and accountability and has become a reference point for AI regulation.

In contrast, the U.S. adopts a sector-specific approach, with various agencies creating rules for industries like healthcare and finance, resulting in a more fragmented regulatory landscape.

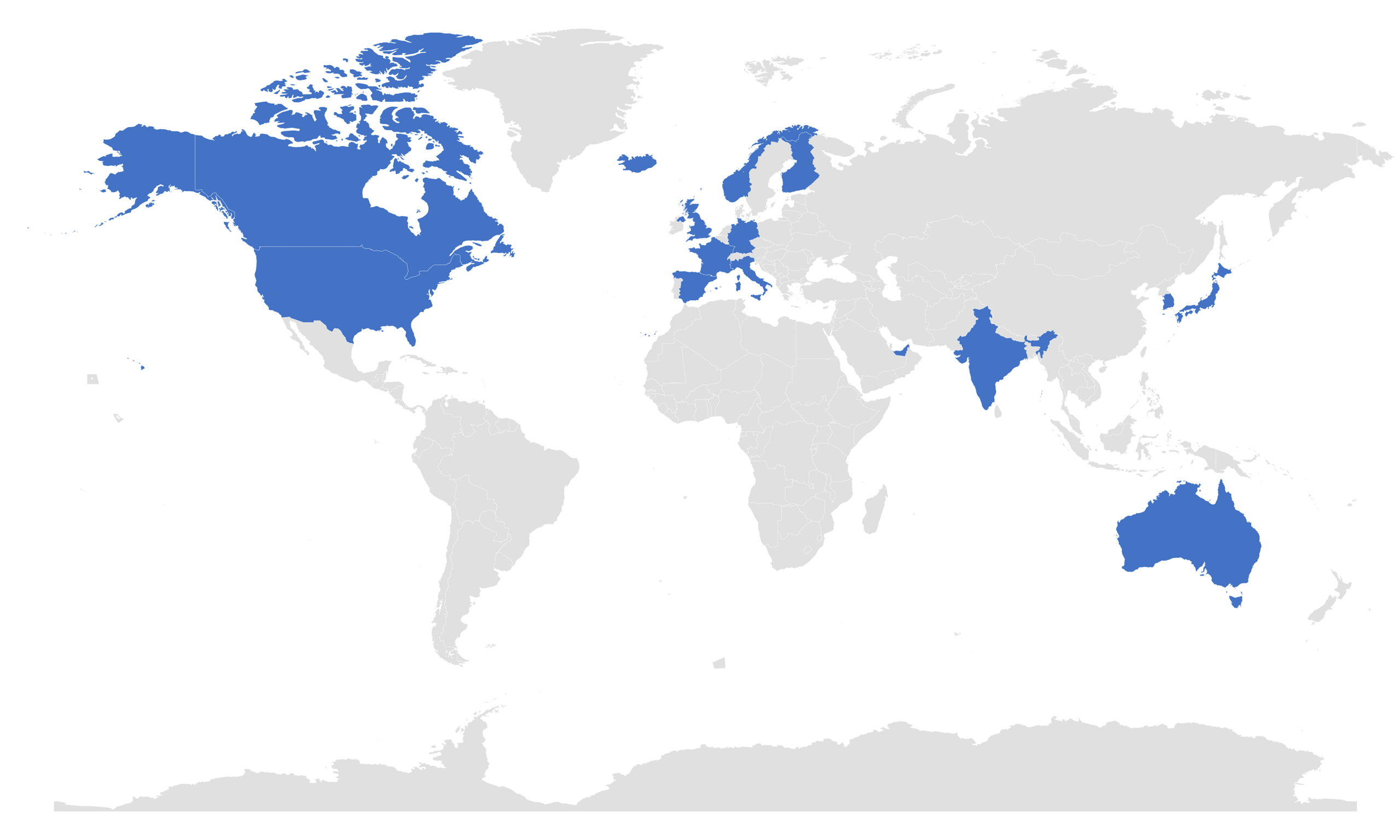

International collaboration is essential to keep AI regulations consistent across borders and to ensure they uphold human rights and democratic values.

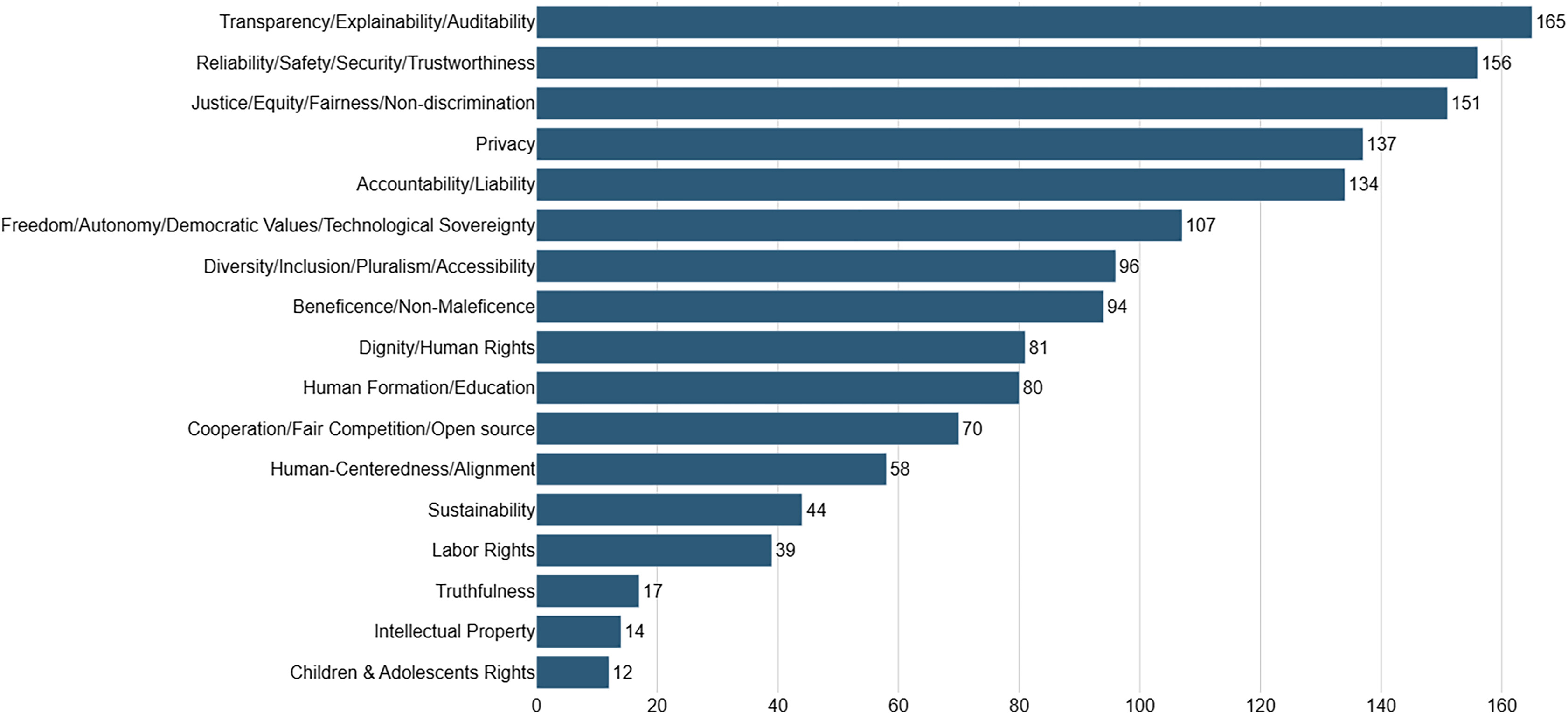

Ethical AI frameworks highlight the importance of transparency, accountability, inclusivity, and fairness to guide the responsible development of AI.

Additionally, AI systems should be subject to audits and checks to prevent conflicts with human rights and environmental health.

AI Ethics in Multi-Stakeholder Collaboration

Addressing AI ethics is a collective effort, requiring contributions from developers, users, ethicists, and policymakers. Multi-stakeholder governance is becoming the standard to ensure responsible AI development.

Implementing inclusive design and assembling diverse teams can significantly reduce bias and enhance fairness throughout AI's development process.

In sectors like healthcare, it's vital for AI developers, clinicians, and ethicists to collaborate to maintain patient trust and ensure equitable AI utilization.

Effective collaboration fosters the creation of ethical AI frameworks, balancing innovation with the protection of human rights and democratic values.

Engaging all stakeholders is crucial for fair AI governance, addressing ethical challenges such as bias, transparency, and accountability.

FAQs on AI Ethics in Education

Ensuring Unbiased and Fair AI Systems in Schools

To keep AI systems in schools fair and unbiased, it's crucial to have the right structures in place. Schools should establish governance frameworks by appointing senior leaders who focus on the ethical use of AI. These leaders ensure oversight and accountability before any AI tools are implemented.

Key Steps to Ensure Fairness:

- Ethical AI Development: Create tools that emphasize fairness and avoid bias, especially in student grading.

- Regular Bias Checks: Conduct routine evaluations for bias in AI systems.

- Generative AI Features: Make these features opt-in by default, so schools can assess the risks before enabling them.

- Collaborative Approach: Involve educators, psychologists, ethicists, and legal experts to address bias and fairness comprehensively.
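The opt-in idea from the list above can be sketched in a few lines: every generative AI feature starts disabled, and enabling one requires a named approver. The class name, feature names, and approver label below are hypothetical and only illustrate the default-off pattern, not any particular vendor's settings.

```python
from dataclasses import dataclass, field


@dataclass
class AIFeatureSettings:
    """Per-school settings in which every generative feature is off until enabled."""

    enabled: set = field(default_factory=set)
    approvals: dict = field(default_factory=dict)

    def opt_in(self, feature: str, approved_by: str) -> None:
        """Enable a feature and record who signed off on it."""
        if not approved_by:
            raise ValueError("an approver is required to enable a feature")
        self.enabled.add(feature)
        self.approvals[feature] = approved_by

    def is_enabled(self, feature: str) -> bool:
        """Features that were never opted into are treated as disabled."""
        return feature in self.enabled


settings = AIFeatureSettings()
before = settings.is_enabled("essay_feedback")  # nothing is on by default
settings.opt_in("essay_feedback", approved_by="AI governance committee")
after = settings.is_enabled("essay_feedback")
print(before, after)
```

Keeping the approver next to each enabled feature ties the technical switch back to the governance step, so it stays clear who reviewed the risks.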

For example, a university might have an AI committee led by a senior official to review AI tools for bias, ensuring faculty agreement before using AI in classes. This alignment keeps AI tools consistent with educational goals and ethical standards. For more on ethical AI practices, visit Anthology.

Protecting Student Privacy in AI-Powered Education Systems

Protecting student privacy in AI-driven education systems requires clear rules regarding data collection, storage, and use. Schools need to safeguard personal information and be transparent about its usage.

Privacy Protection Measures:

- Regulatory Compliance: Follow regulations and set ethical guidelines to respect privacy in AI usage.

- Transparency: Be open about how AI uses student data and allow schools to control AI features.

- Education & Awareness: Educate staff and students about privacy risks and ethical AI use to foster a culture of protection.

For instance, a school district might have a policy requiring AI vendors to disclose data handling practices and obtain parental consent before collecting student data. This ensures privacy remains a priority and data is managed responsibly. More on privacy protection in AI can be found at TAO Testing.

The Importance of Transparency in AI Tools for Education

Transparency in AI tools used in education is critical for building trust and ensuring accountability. Students, parents, and educators need to understand how AI tools make decisions to feel confident in these systems.

Building Trust Through Transparency:

- Clear Communication: Explain what AI can and can't do, and how it uses data.

- Open Information: Keep staff and students informed about AI use and be transparent about AI features and risks.

For example, an educational platform might clarify how its AI grading system works and allow students to review and challenge AI-generated grades. This approach helps AI tools become a trusted and useful part of education. More on transparency can be found at Cornell University.